Case Studies

RUSH Network Portal

While working for WIRUlink from 2019 to 2022, I led the development of the RUSH Network Portal: a platform through which Internet Service Providers (ISPs) can white-label and sell fixed wireless internet products on the largest wireless infrastructure in South Africa.

I gained tremendous experience working on a single large-scale project for just under 3 years. Working directly with the CTO, a diverse development team, physical network specialists and the sales team, I used Laravel to dig deep into backend programming, systems design, security and UX/UI, and to find creative ways to marry the old school with the new.

Solutions

Since the RUSH Network caters for both small-scale and large ISPs, we had to create solutions for both.

The first priority was to get a frontend to market with as much of the necessary functionality as possible. The user experience had to be intuitive and easy for small ISPs to use in conjunction with their existing processes, which were often quite unsophisticated. Some were startups with wireless as their only product.

On the other hand, large ISPs sell products from various service providers all over the country, especially in the fibre market. These ISPs need to integrate the Portal’s functionality into their existing systems, usually through an API.

UX/UI

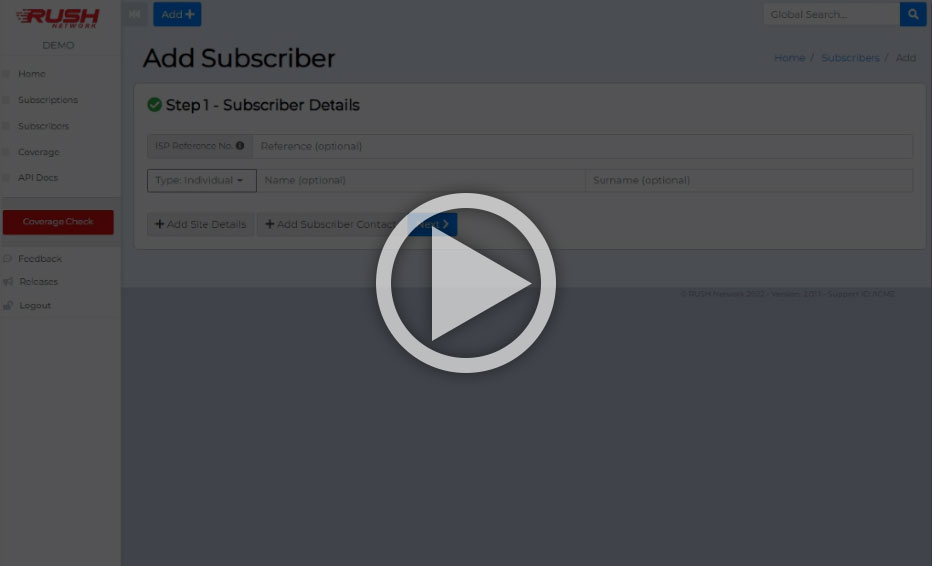

(Please note that the examples below use bare-bones styling, which was intended to facilitate white-labelling.)

We decided to keep things simple at first, using only Laravel Blade templates for the frontend, with good old Bootstrap 4 and jQuery – something all our developers were familiar with. I also wrote a JavaScript library for reactivity, which you can read about here.

We took care to simplify user interactions. For instance, creating a new subscriber (i.e. an ISP’s customer) can be a daunting task. First, the salesperson must do what’s called a Coverage Check, which establishes whether there’s a line of sight between the customer’s premises (called a Site) and one of our towers. Additionally, the customer’s details must be captured, the installation address confirmed, and the available products ordered.

Salespeople and small ISPs do these steps in varying order. In fact, sometimes steps are skipped completely until later, so we had to find a simple way to cater to everyone.

In the example below, you can see that almost everything is optional, and the main sections are activated with a single click. Form validation is done in real-time and success/warning icons are displayed in the toggle buttons as the forms are filled out.
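The icon logic described above can be sketched roughly as follows. This is only an illustration in TypeScript, not the Portal's actual code: the `FieldRule` shape, the `sectionStatus` function and the three status names are hypothetical, and the real implementation used jQuery with my own reactivity library.

```typescript
// Hypothetical status shown on a section's toggle button.
type SectionStatus = "empty" | "valid" | "warning";

// Hypothetical description of one form field in a section.
interface FieldRule {
  name: string;
  required: boolean;
  validate: (value: string) => boolean;
}

// Derive the toggle icon from the current field values.
// An untouched section stays "empty", so optional sections never nag the user.
function sectionStatus(rules: FieldRule[], values: Record<string, string>): SectionStatus {
  let anyFilled = false;
  let anyProblem = false;

  for (const rule of rules) {
    const value = (values[rule.name] || "").trim();
    if (value === "") {
      // A missing required field only matters once the section is in use.
      if (rule.required) {
        anyProblem = true;
      }
    } else {
      anyFilled = true;
      if (!rule.validate(value)) {
        anyProblem = true;
      }
    }
  }

  if (!anyFilled) {
    return "empty";
  }
  return anyProblem ? "warning" : "valid";
}

// Example rules for a contact section (assumed fields, for illustration only).
const contactRules: FieldRule[] = [
  { name: "firstName", required: true, validate: (v) => v.length > 0 },
  { name: "email", required: false, validate: (v) => v.indexOf("@") > 0 },
];
```

Calling `sectionStatus` on every input event is enough to keep the icon in sync with the form, since the status is derived from the values instead of being stored separately.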

Coverage checks can be done directly in the same window, or selected from existing checks. (In the example below, the coverage check is abandoned, and the user selects an existing one from a list instead.) Contact details can be added in a similar way, and once a subscriber is created, the user can always come back to add or edit the details to commence installation.

(This reactivity was accomplished by the library I wrote about here.)

Complexity

The system quickly grew in sophistication. Although the platform was at first only intended for sales, the company saw the potential of expanding it to automate many internal processes that still required manual intervention. It could also empower ISPs to do first-level support for their own clients, instead of having to wait for support from us.

This added functionality included:

- A secure API with practically all of the Portal’s functionality available, with 100% functional Swagger documentation

- Automatically billing the ISPs according to each subscriber’s data usage and subscription plans, with detailed reporting available

- Provisioning devices on physical installation (i.e. automatically setting up the devices and connecting them to the network)

- Monitoring data usage in real-time

- Debugging devices, including signal strength, pinging and rebooting

- Upgrading, downgrading and cancelling subscription plans

- Running promotions, which can be different per ISP

- Moving and/or replacing devices

- Generating customised KML files for coverage maps

We also wanted to make it as easy as possible for ISPs to white-label and sell the products directly on their own websites, which could be a challenge for small ISPs without web developers. This included a JavaScript plugin for doing coverage checks, as well as a “shopfront” that could easily be embedded into a web page, with default styles that could be overridden.

(You can see an example of the shopfront and coverage plugin here that includes offerings from multiple ISPs.)

Modular

Many of these areas depend on one another, so I had to find a way to break them down into self-contained units that could interact without side effects.

First of all, I created a standardised way to pass information to and from the controllers, using Laravel’s built-in MessageBag class and custom traits. To keep the controllers lean, I kept the logic for handling each request in separate classes. That meant we could reuse code, regardless of whether a user was viewing the frontend or calling an API endpoint. Changes could be made in one place without duplication.

Secondly, I used distinct classes for as many of the features as possible. For instance, if you need to create a new subscriber, you don’t call a create() method on some bloated Subscriber class. Rather, there’s a class that specifically creates subscribers, called SubscriberCreator, and you call the SubscriberCreator->create() method. Want to update a subscriber? Well, there’s a class for that too, and you can expect it to return errors and/or messages in a standard way that can be passed directly back to the view, or used within a calling class to decide what to do next.
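To make the pattern concrete, here is a minimal sketch of a single-purpose class with a standard return shape. It is written in TypeScript purely for illustration; the real code was PHP built on Laravel's MessageBag, and everything here apart from the `SubscriberCreator` name and its `create()` method (the `ActionResult` shape, the validation rules, the two handler functions) is an assumption of mine.

```typescript
// Hypothetical standard result returned by every action class.
interface ActionResult<T> {
  ok: boolean;
  data?: T;
  errors: Record<string, string[]>; // field name -> messages, in the spirit of a MessageBag
  messages: string[];
}

interface Subscriber {
  id: number;
  name: string;
  email?: string;
}

interface SubscriberInput {
  name?: string;
  email?: string;
}

// One class, one job: creating subscribers.
class SubscriberCreator {
  private nextId = 1; // stand-in for a database sequence

  create(input: SubscriberInput): ActionResult<Subscriber> {
    const errors: Record<string, string[]> = {};
    const name = (input.name || "").trim();

    if (name === "") {
      errors["name"] = ["A subscriber name is required."];
    }
    if (input.email !== undefined && input.email.indexOf("@") === -1) {
      errors["email"] = ["The email address looks invalid."];
    }
    if (Object.keys(errors).length > 0) {
      return { ok: false, errors: errors, messages: [] };
    }

    const subscriber: Subscriber = { id: this.nextId++, name: name };
    if (input.email !== undefined) {
      subscriber.email = input.email;
    }
    return { ok: true, data: subscriber, errors: {}, messages: ["Subscriber created."] };
  }
}

// The web and API entry points reuse the same action; only the presentation differs.
function handleWebRequest(creator: SubscriberCreator, input: SubscriberInput): string {
  const result = creator.create(input);
  if (result.ok) {
    return result.messages.join(" ");
  }
  const lines: string[] = [];
  for (const field of Object.keys(result.errors)) {
    for (const message of result.errors[field] || []) {
      lines.push(message);
    }
  }
  return lines.join(" ");
}

function handleApiRequest(
  creator: SubscriberCreator,
  input: SubscriberInput
): { status: number; body: ActionResult<Subscriber> } {
  const result = creator.create(input);
  return { status: result.ok ? 201 : 422, body: result };
}
```

Because both handlers receive the same result shape, neither needs to know how a subscriber is created, and a calling class can branch on `ok` in exactly the same way.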

(I want to give my thanks and the credit to my colleague Chris Kotze for guiding me in this direction.)

Test Driven

As the codebase grew, changes in one area could easily cause unwanted side effects somewhere else. Therefore, I opted to write detailed unit and feature tests.

However, I didn’t always use strict TDD. I only considered it necessary to write tests before development for heavily transactional areas such as placing orders, user management, billing, security and API calls.

Other tests were written in response to new features or changes in other parts of the codebase that could affect functionality in various places. Nonetheless, I still managed to write hundreds of tests to make sure the system ran smoothly.

It was a mission to do, but the certainty it gave us and the speed at which we could debug side effects made it worth every bit of effort. And there are few things as satisfying as seeing all tests pass after you’ve made a change, or seeing a test in another area of the code catch a bug caused by your current change.

Secure

We took privacy and security very seriously.

I used custom scopes that altered queries before execution, so that they only returned data linked to the current user, filtered by their role and permissions.
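The idea behind such scopes can be sketched as below. This is an in-memory TypeScript stand-in and not the Portal's code: in Laravel the scope would add constraints to the database query itself, and the `User`, `SubscriberRecord`, `ownIspScope` and `ScopedRepository` names are hypothetical.

```typescript
type Role = "admin" | "isp_user";

interface User {
  id: number;
  role: Role;
  ispId: number | null;
}

interface SubscriberRecord {
  id: number;
  ispId: number;
  name: string;
}

// A scope narrows a result set for a given user before the caller ever sees it.
type Scope<T> = (rows: T[], user: User) => T[];

// Assumed rule: admins see everything, ISP users only see their own ISP's subscribers.
const ownIspScope: Scope<SubscriberRecord> = (rows, user) => {
  if (user.role === "admin") {
    return rows;
  }
  return rows.filter((row) => row.ispId === user.ispId);
};

// Every read goes through the registered scopes, so no call site can forget the filter.
class ScopedRepository<T> {
  private rows: T[];
  private scopes: Scope<T>[];

  constructor(rows: T[], scopes: Scope<T>[]) {
    this.rows = rows;
    this.scopes = scopes;
  }

  allFor(user: User): T[] {
    let result = this.rows;
    for (const scope of this.scopes) {
      result = scope(result, user);
    }
    return result;
  }
}
```

The point of applying the filter centrally is that individual controllers never have to remember to add it.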

All non-public routes were secured by custom gates and middleware.

For the API, users could be assigned permissions per endpoint, which was handled by custom gates and expiring tokens. Rate limiting could also be set per user on each endpoint (with a default applied).
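As a rough illustration of how these three checks fit together, here is a simplified TypeScript sketch. It is not the Portal's implementation, which relied on Laravel gates and middleware; the `ApiToken` shape, the `ApiGate` class, the endpoint names, the default of 60 requests and the one-minute window are all assumptions.

```typescript
// Hypothetical token carrying per-endpoint permissions and rate-limit overrides.
interface ApiToken {
  userId: number;
  expiresAt: number; // epoch milliseconds
  allowedEndpoints: string[];
  rateLimits: Record<string, number>; // endpoint -> max requests per window
}

type Decision = "allowed" | "token_expired" | "forbidden" | "rate_limited";

const DEFAULT_LIMIT = 60; // assumed default when no override is set
const WINDOW_MS = 60000; // assumed one-minute sliding window

class ApiGate {
  // Timestamps of recent allowed requests, keyed by "userId:endpoint".
  private hits: Record<string, number[]> = {};

  check(token: ApiToken, endpoint: string, now: number): Decision {
    if (now >= token.expiresAt) {
      return "token_expired";
    }
    if (token.allowedEndpoints.indexOf(endpoint) === -1) {
      return "forbidden";
    }

    const override = token.rateLimits[endpoint];
    const limit = override !== undefined ? override : DEFAULT_LIMIT;

    const key = token.userId + ":" + endpoint;
    const previous = this.hits[key] || [];
    // Keep only the requests that still fall inside the window.
    const recent = previous.filter((timestamp) => now - timestamp < WINDOW_MS);

    if (recent.length >= limit) {
      this.hits[key] = recent;
      return "rate_limited";
    }
    recent.push(now);
    this.hits[key] = recent;
    return "allowed";
  }
}
```

Checking expiry first, then permission, then the rate limit means a rejected request never counts against the user's quota.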

Conclusion

I’m very proud that I could work on this project. It had plenty of moving parts and proved to be very challenging to fit everything together, but that’s part of the excitement and learning experience.

I’m happy that I could leave the team with a solid product that did what it was intended to do, and with quality code that is easy to maintain. I always say that part of my job is to make myself redundant. I guess that’s the best we can do as programmers :)